One of my coworkers is fond of saying all problems are hard. I'd probably add some caveats to that statement, but it's remarkably on-point when it comes to playing video on the Web. Consider for a moment the challenge of tracking the parts of a video that are popular: finding out which viewers have watched a video, for how long, and what portions they have consumed. If you check the HTML standard, there are all sorts of helpful events and information available during playback that might lull you into thinking this is one of those elusive "easy problems." Don't be fooled! Unless you're one of the lucky few who only have to worry about a standards-compliant desktop browser, you're in for a ride. Buckle up.

Brightcove Video Cloud has a real-time analytics system that tracks video playback across hundreds of different devices, operating systems and browsers. One of the really fantastic stats it collects is video engagement – basically a view count for every second of playback. It's a real handy thing to have if you're trying to figure out which parts of your content your viewers are getting most excited about. It's a fairly easy statistic to describe, so you might imagine it would be fairly easy to code up. Not quite. You'll have to contend with limited bandwidth, battery and processing power on mobile devices; the asynchronous nature of video playback and viewers' attention spans; and partial, broken and conflicting video implementations.

The first step in building a video analytics reporter is to start keeping track of which seconds have been viewed during regular playback. The obvious solution would be to just report an "engagement" event back to the backend analytics system every second during playback. That would probably work like gangbusters, but I don't blame you if you flinched reading that last sentence. Sending an HTTP request every second is a pretty significant waste of battery and bandwidth on a mobile phone. As usual, the solution is to get a bit more clever. By batching up engagement events, we can report them at a more reasonable frequency. After that tiny optimization, we have something that looks like it's doing interesting work and is still pretty simple. If our audience never paused or seeked during playback, we'd be done! Unfortunately, they do, and that's where things start getting tricky.
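Here's a minimal sketch of that batching idea in TypeScript. The class name, the report callback and the batch size of ten are all assumptions made for illustration; they are not taken from the actual Video Cloud reporter.

```typescript
// Hypothetical sketch: the names and the default batch size are assumptions.
type ReportFn = (seconds: number[]) => void;

class EngagementBatcher {
  private pending: number[] = [];
  private lastSecond = -1;
  private readonly report: ReportFn;
  private readonly batchSize: number;

  constructor(report: ReportFn, batchSize: number = 10) {
    this.report = report;
    this.batchSize = batchSize;
  }

  // Call on every timeupdate with the video's currentTime (in seconds).
  record(currentTime: number): void {
    const second = Math.floor(currentTime);
    if (second === this.lastSecond) return; // still inside the same second
    this.lastSecond = second;
    this.pending.push(second);
    if (this.pending.length >= this.batchSize) this.flush();
  }

  // Send everything collected so far as one report instead of one per second.
  flush(): void {
    if (this.pending.length === 0) return;
    const batch = this.pending;
    this.pending = [];
    this.report(batch);
  }
}
```

In a real page, the report callback would wrap whatever HTTP call your analytics backend expects, and record would be wired to the video element's timeupdate event.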

Pausing is the less complicated of the two, so let's get that out of the way next. Now that we're batching up the viewing time before reporting it, we've allowed the information in the browser to get significantly out of sync with our backend. If someone watches nine seconds of video, pauses it and never returns, we'll never know about a pretty significant chunk of viewing time. Since this is a fairly common occurrence, we can just flush our currently tracked engagement every time playback pauses and be reasonably sure we're not losing tons of data. We're using up more of the user's bandwidth for tracking, but we're eliminating a big source of inaccuracies. Seeking will require a more nuanced solution.
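Flushing on pause can be sketched in a few lines. The two interfaces below are hypothetical stand-ins I've made up for the example: one models the small slice of the video element we need, the other models any tracker that exposes a flush method.

```typescript
// Hypothetical minimal shapes: assumptions for this sketch, not a real API.
interface PausableVideo {
  addEventListener(type: string, listener: () => void): void;
}

interface Flushable {
  flush(): void;
}

// Report whatever engagement has been batched so far each time playback pauses.
function flushOnPause(video: PausableVideo, tracker: Flushable): void {
  video.addEventListener("pause", () => {
    tracker.flush();
  });
}
```

Keeping the function dependent on these narrow shapes, and not on the full DOM type, makes it easy to exercise with a fake video object in a test.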

The HTML5 spec defines seeking and seeked events, but they interact poorly with how the current playback position is calculated for our purposes. Ideally, we'd be able to capture the time at the start of the seek so we could report any engagement we've been tracking up to this point and start collecting data afresh once the seek is finished. The API to initiate a seek, however, requires that the current time be directly overwritten, and there's no good way to find out the actual time while the seek is in progress. We can address this by keeping track of the value of currentTime at the last timeupdate event, but we'll have to be careful that multiple seeks in quick succession, or a timeupdate fired while a seek is still in progress, don't reuse the old value. That's hopefully just a matter of bookkeeping though, and now we have a video engagement reporting tool that works great until we try it out on that Android 2.x device that your less tech-savvy relatives refuse to upgrade.
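One way to do that bookkeeping, sketched under some assumptions: the SeekableVideo interface is a hypothetical minimal stand-in for the video element, and the two callbacks are made-up hooks for "flush what we have" and "start collecting afresh." The event names timeupdate, seeking and seeked are the ones the spec defines.

```typescript
// Hypothetical minimal shape of a video element for this sketch.
interface SeekableVideo {
  currentTime: number;
  addEventListener(type: string, listener: () => void): void;
}

function trackSeeks(
  video: SeekableVideo,
  onSeekStart: (fromTime: number) => void, // assumed hook: flush engagement
  onSeekEnd: (toTime: number) => void, // assumed hook: restart collection
): void {
  let lastKnownTime = video.currentTime;
  let seeking = false;

  video.addEventListener("timeupdate", () => {
    // During a seek, currentTime already holds the target, so don't trust it.
    if (!seeking) lastKnownTime = video.currentTime;
  });

  video.addEventListener("seeking", () => {
    // A second seek before the first finishes must not report again.
    if (seeking) return;
    seeking = true;
    onSeekStart(lastKnownTime);
  });

  video.addEventListener("seeked", () => {
    seeking = false;
    lastKnownTime = video.currentTime;
    onSeekEnd(lastKnownTime);
  });
}
```

The seeking flag is what keeps rapid-fire seeks and mid-seek timeupdates from clobbering the last position we actually watched.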

Many older devices don't fire seeking events at all. We could try to address this with some sort of hybrid solution that uses a seek-event-based strategy in most situations and falls back to something less pretty on platforms where the event can't be relied on. I would worry that our fallback mechanism would end up being fragile, however, and it means maintaining two code paths instead of one. A natural question to ask would be: is it possible to detect seeks reliably without an event at all? A minute of pondering might bring you to the realization that a big gap in currentTime between two timeupdates might be a seek. Formalizing that intuition is a bit involved.

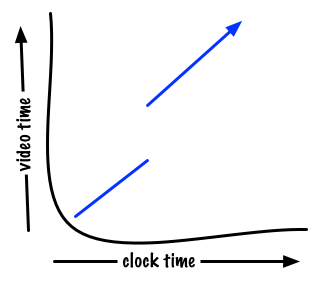

In the abstract, we can think of currentTime as a function that we're sampling during playback. If we had access to its value at all points in time, seeks would be very obvious discontinuities in the graph:

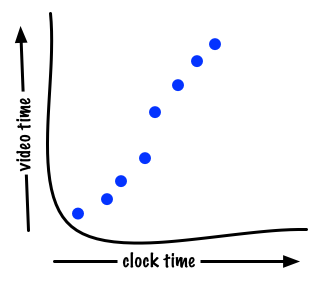

In reality, we're actually dealing with a bunch of unevenly-spaced dots moving upward from the bottom left to the top right at approximately a 45-degree angle. Something like this:

Definitely trickier. If we imagine drawing a line connecting all those dots, seeks would be the stretches where the line is significantly more vertical than horizontal. If you're feeling bold enough to dredge up memories of undergrad calculus, you might even say seeks are those periods where the derivative of the function is significantly greater than one. Framing it as a calculation of the derivative is nice because there are a lot of well-tested techniques to apply to the problem, whereas a Google search for "html5 detect video seeks" is underwhelming.

This approach is not without complications. The dots on our imaginary graph aren't going to be evenly spaced. We'll also have to consider whether it's safe to assume that the browser is able to consistently play back content in "real-time." Measurements taken on a variety of platforms indicate that at least at the start of playback, the video timeline may be progressing much more slowly than your wall clock. Finally, it's worth questioning whether we can trust the video element's reported time. On many platforms, playback is actually performed by dedicated hardware which may trade off measurement accuracy for speed; similar trade-offs or caching could occur at any of the layers between the circuitry and the viewer's browser. Any of these factors can introduce noise into our measurements and cause us to incorrectly detect a discontinuity in our idealized currentTime function.

Given all those complications, how can we estimate this derivative over time? With a fixed polling interval, it would be sufficient to set a threshold on the absolute difference in measurements and report a seek whenever that threshold was exceeded. If we wanted to be really robust, we could use a technique like Savitzky-Golay to handle inaccuracies in the reported sample values without introducing a ton of computational overhead.
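For the fixed-interval case, the threshold check is about as simple as it sounds. This is only a sketch: the function name is made up, and the threshold is a tuning value you'd have to pick yourself (it needs to be comfortably larger than the polling interval). It skips the Savitzky-Golay smoothing entirely.

```typescript
// samples: currentTime readings taken at a fixed polling interval (seconds).
// threshold: assumed tuning value, larger than the polling interval.
// Returns the indices of samples that look like they follow a seek.
function findSeeksFixedInterval(samples: number[], threshold: number): number[] {
  const seekIndices: number[] = [];
  let previous: number | null = null;
  samples.forEach((value, index) => {
    // A jump bigger than the threshold, in either direction, counts as a seek.
    if (previous !== null && Math.abs(value - previous) > threshold) {
      seekIndices.push(index);
    }
    previous = value;
  });
  return seekIndices;
}
```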

With a non-uniform polling interval though, we're forced to look for more general techniques. A straightforward one is to look at the ratio of the change in video time to the change in clock time between the last timeupdate event and the current moment. Put somewhat more simply: we're dividing the elapsed video time by the elapsed real time. What we're basically doing here is calculating the derivative of video position between the last measurement and the current moment, ignoring any historical information we might have gathered before then. It turns out this is a well-known technique in other engineering disciplines called the forward difference. The forward difference is fast to calculate but is known to amplify perturbations in the input signal. If the ratio is close to zero, it's particularly bad. In that case, almost all of the estimate is a product of noise in the input instead of the actual signal we're trying to describe. In the video realm, we get a free out: we don't care about periods when the video timeline is progressing slower than real-time; in fact, we'd prefer to ignore them.
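In code, that ratio is a one-liner plus a guard. The PlaybackSample shape and the function name are assumptions for this sketch; both times are in seconds, and returning NaN when no clock time has elapsed is my own choice, made so callers can't mistake a divide-by-zero for a seek.

```typescript
// Hypothetical sample shape: both fields are in seconds.
interface PlaybackSample {
  videoTime: number; // the video's currentTime when sampled
  clockTime: number; // wall-clock time when sampled
}

// Elapsed video time divided by elapsed real time between two samples.
// Roughly 1 during normal playback, far above 1 across a forward seek.
function playbackRatio(previous: PlaybackSample, current: PlaybackSample): number {
  const elapsedVideo = current.videoTime - previous.videoTime;
  const elapsedClock = current.clockTime - previous.clockTime;
  if (elapsedClock <= 0) return NaN; // no usable estimate without elapsed time
  return elapsedVideo / elapsedClock;
}
```

For example, a jump from 10 to 70 seconds of video over half a second of wall-clock time gives a ratio of 120, while ordinary playback hovers around 1.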

To iron out any remaining noise, we could mix historical information into our piecewise derivative calculation. Given we're modeling a mostly linear function, we could pretty easily apply a classic algorithm like least-squares fit. I'd love an excuse to code that, but it's probably more complex than required. An alternative that works really well in practice is just to ignore timeupdates that occur too close to one another in time, where the error in measurements will get amplified. Combine that with ignoring derivatives lower than one, and we've put together a robust seek-detector that only relies on timeupdate events and can cue us when engagement tracking should be flushed. Even on platforms that don't reliably support the timeupdate event, we can simulate it with setTimeout. Not too shabby!
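Putting those pieces together might look something like the sketch below. Everything named here (SeekDetector, its options, pollForSeeks) is hypothetical, and the threshold values are guesses you'd want to tune against real devices. Note that it only flags forward jumps, matching the "ignore ratios below the threshold" rule; a backward seek produces a negative ratio and would need handling this sketch doesn't attempt.

```typescript
// Hypothetical options: both values are tuning assumptions.
interface SeekDetectorOptions {
  minIntervalSeconds: number; // ignore samples closer together than this
  maxRatio: number; // video-time / clock-time ratios above this are seeks
}

class SeekDetector {
  private lastVideoTime: number | null = null;
  private lastClockTime = 0;
  private readonly options: SeekDetectorOptions;

  constructor(options: SeekDetectorOptions) {
    this.options = options;
  }

  // Feed one sample (both in seconds); returns true if it looks like a seek.
  sample(videoTime: number, clockTime: number): boolean {
    if (this.lastVideoTime === null) {
      this.lastVideoTime = videoTime;
      this.lastClockTime = clockTime;
      return false;
    }
    const elapsedClock = clockTime - this.lastClockTime;
    // Too close to the last accepted sample: skip it, but keep the old
    // baseline so a jump hidden here still shows up on the next sample.
    if (elapsedClock < this.options.minIntervalSeconds) return false;
    const ratio = (videoTime - this.lastVideoTime) / elapsedClock;
    this.lastVideoTime = videoTime;
    this.lastClockTime = clockTime;
    return ratio > this.options.maxRatio;
  }
}

// Fallback for platforms without reliable timeupdate events: poll instead.
// Returns a function that stops the polling loop.
function pollForSeeks(
  video: { currentTime: number },
  detector: SeekDetector,
  onSeek: () => void,
  intervalMs: number = 250,
): () => void {
  let stopped = false;
  const tick = (): void => {
    if (stopped) return;
    if (detector.sample(video.currentTime, Date.now() / 1000)) onSeek();
    setTimeout(tick, intervalMs);
  };
  tick();
  return () => {
    stopped = true;
  };
}
```

On platforms where timeupdate does fire, you'd call sample from the event handler instead and let onSeek trigger the same engagement flush used for pauses.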

If you're anything like me, the level of complexity in pulling together a cross-platform video analytics reporter is kind of shocking. HTML5 and the introduction of the video element on our desktops and mobile devices have opened up internet video to an unprecedented proportion of the world's population. Unfortunately, we're still working with programming models inspired by the buttons on the front of a VCR. I don't think I'm stretching too much by saying we're in the pre-"GOTO considered harmful" days of video player APIs. We do have a strong foundation in the HTML standard, however, and I'm inspired by the huge opportunity to invent new frameworks to tackle these problems. If doing a flavor of concurrent programming without any synchronization primitives intrigues you, help us out! Maybe one day we'll discover one of those "easy problems."